Welcome

Welcome to Dare to Discuss, a bi-monthly event for readers, reviewers, and bloggers to have in-depth discussions about books. Anyone and everyone is welcome to participate and join the discussion. But first, here are a few rules.

Rules

- Be polite. All opinions are welcome in this discussion and contrasting viewpoints are encouraged, but be respectful and polite. This discussion is about the book. Check your personal vendettas at the door. Thank you.

- Feel free to link to your own review of the book in the comments, but please keep the discussion here. That way everyone can join in!

The Book

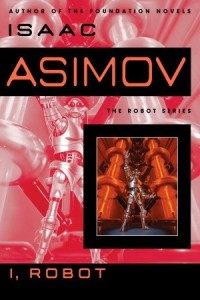

The three laws of Robotics:

1) A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2) A robot must obey orders given to it by human beings except where such orders would conflict with the First Law.

3) A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

With these three, simple directives, Isaac Asimov changed our perception of robots forever when he formulated the laws governing their behavior. In I, Robot, Asimov chronicles the development of the robot through a series of interlinked stories: from its primitive origins in the present to its ultimate perfection in the not-so-distant future–a future in which humanity itself may be rendered obsolete.

Here are stories of robots gone mad, of mind-reading robots, and robots with a sense of humor. Of robot politicians, and robots who secretly run the world–all told with the dramatic blend of science fact and science fiction that has become Asimov’s trademark.

The Reviews

David @ The Scary Reviews says:

What happens when robots become so advanced that we can’t distinguish them from a human being? I, Robot was so far ahead of its time it’s scary. We haven’t arrived in this possible future yet, but when we do I hope we don’t forget the three rules that Isaac Asimov invented. I’m sure if we make robots this advanced we are in for a world of hurt. [Full Review Here]

Melanie @ MNBernard Books says:

Frankly, I’m not sure how I feel about this book because it was interesting, but maddeningly confusing. Each of the experiences with robots was unique and offered a new look on robotic technology and how they would be important and how they would evolve in future society. At the same time, there was so much technological terminology that I just felt like I was fumbling around in the dark most of the time. [Full Review Here]

Lilyn @ Scifi & Scary says:

Isaac Asimov’s I, Robot deserves its place in the Hallowed Halls of Classic Science Fiction. This collection of short stories, which showcases the development of artificial intelligence, is exquisitely well-crafted. I can only imagine how groundbreaking these pieces must have been when they were written. Even though AI hasn’t taken the exact steps that Asimov lays out, it’s still a near prophetic look at its development. From the robot nanny most of us had not heard about, to the deceptive robot everyone knows from the Will Smith I, Robot, it’s a believable evolution of robotics. [Full Review Here]

The Discussion

Please note that spoilers are acceptable and likely to happen! You’re encouraged to ask your own questions about the book for other discussioners, but in case you don’t have any, I’ve listed a few below to get the ball rolling.

- How far away do you think we are from the creation of AI that can pass for ‘real’?

- What do you think influenced Asimov to come up with his 3 laws of robotics to begin with?

- Where is the line crossed from artificial intelligence to the creation of a new life form? Will we be able to tell when we cross it?

- What defines artificial versus natural intelligence? In 100 years, will we be able to tell?

Welcome, everyone. For those of you who have read I, Robot, what was your impression of this classic sci-fi story?

I think out of all of us it’s safe to say I’m the one who loved it. *shifty*

Yay! D2D! ^.^ I am actually really excited about this topic as a scientist (though perhaps not in this field), and I’m actually going to an AI talk tomorrow. Woo! Anyway! For your first question:

Honestly, I don’t think we’re that far off from artificial intelligence. A lot goes on behind the scenes of the science world and not all of that is made known to the public, especially when the research is still ongoing. That being said, one area of technology where we may start to see AI first I think is going to be self-driving cars. It’s a popular topic, after all. They’re already talking about possibly having things like Uber and other taxi forms of driving being made automatic. They are even starting to test self-driving cars in some cities. The question is: Is this AI? And if so, how do we feel about AI driving our cars for us?

I don’t think self driving cars count as AI. The car is programmed with parameters for the various tasks. To me AI would involve a component of thinking and problem solving. But I agree that AI isn’t far off.

In that case, if AI will not be used for self-driving cars, what do you think will be the first application of AI?

When I think about AI, I think the computer ‘Watson’ that was featured on Jeopardy would be the first application. Maybe I’m way off on this topic! You probably know more about it than I do. Your discussion tomorrow on AI sounds very cool.

Okay. I had to look that computer up, but that computer isn’t true AI. It sounds like it was only able to answer questions and only when they were asked in certain ways. That’s very limiting. But you’re saying you think AI would initially be presented in the form of computers. To what end? Science utilization? Answering questions? Would the first AI just be used for a game show? :p

I think cars may unintentionally be an early venture into AI, perhaps through delivery trucks, taxis, or especially military applications like drones. Which is kind of “Terminator” territory, but when have we ever paid a lot of attention to warnings in fiction?

Maybe more likely would be finance or government. I could easily see AI in demand for trading stocks and bonds intelligently. There is already a “robotic” lawyer offering solutions to traffic violation tickets — the law is ridiculously complex and self-contradictory in many areas. I could easily see a demand for AI lawyers — though again, we might not like what comes of that. But if they work well, the next step is judges, perhaps even higher officials. As assistants to humans, of course… but give them a century to become old hat and they’ll be running the show while humans put their feet up, I think.

That’s so cool that you get to talk to an AI tomorrow!!

I don’t think we’re far from it myself. Like you said, lots of research going on, not that much being revealed to the public. But even with that I still remember reading some articles that reveal we’re fairly far advanced with just what the general public knows.

I guess self-driving cars would be considered AI. I like the idea, and I don’t. On one hand : Yay, car can drive, I can read!! BUT: If the car’s 1st law is to avoid harm for me, what if it decides that the best way to avoid the most harm for me is to do something which results in the harm of someone else? I don’t think I would fully trust it. Not only for that, but just for the fact that people drive so ILLOGICALLY on the road, I can’t see a machine that thinks linearly being able to succeed.

Wasn’t there just a thing not too long ago about a self-driving car getting rear-ended?

Lilyn — I wouldn’t be thrilled, but I’d be more than happy to have my car with me alone in it steer off a cliff to avoid killing a busload of children. In fact, I’d like to think that any of us would make that choice ourselves. Because it would be the right thing to do. The “trolley car” problem is much overstated — with faster reactions unmuddied by human glandular panic, the chances of AI or plain automation averting harm in an emergency are just plain better than human.

I’d much rather the car sacrificed me than the kids, too, but would the car make that choice? What do you program the car with? “Keep driver safe”? “Keep all beings on road safe?” Where do you hit that line where you ensure the car is able to function without being overloaded with processing delays?

I think you’re right – logically. I mean, I know that it’s probably the case. But knowing something with your brain and KNOWING something instinctively, in a way that you won’t freak out about at least a little bit, can sometimes be 2 different things.

Agreed. We will never be comfortable with it, because we grew up bossing the cars around.

Our grandkids or their children, though — it will be normal to them. Just like we’re fine with push-button elevators but our forebears who got used to human-controlled ones in the early 20th century could never quite trust those pushbuttons.

Oh god. We’re going to be the old people that young people laugh at for their antiquated beliefs *sigh*

I’m good with that, haha!

It’s the ciiiiiiiiiirrrrrcle of liiiiiife!

Haha! I’m not talking TO the AI. I’m going to a talk ABOUT AI. :p But it should be pretty interesting. Perhaps I’ll come back with little tidbits. (P.s. it’s on self-driving cars, which is where the car part came from.)

Myself, I’m quite averse to the idea of self-driving cars. I don’t trust technology because technology is designed by humans and humans are flawed. Thus the AI would have to be driving the car, but then AI would eventually figure out that humans are a plague unto themselves and the only way to save humanity is to kill it. So… I don’t trust self-driving cars or AI. (That, and I would grow so impatient if there was traffic. I’d probably just put it on manual anyway. :p)

And I agree with you. AI would only react the way it thinks is best, but since it has no emotional attachment to the people at stake, it can only think in the logical pattern. Thus, it would likely save the most number of people possible, while humans might save certain people over others. Sometimes logic doesn’t match human morals or judgement.

I hadn’t heard about this rear-ending bit. When was this?

It’s apparently happened more than once. http://fortune.com/2015/07/17/google-self-driving-car-crash/

Given what you said about AI wiping humans out – how do you feel about the Terminator movies? 🙂

See? SEE?! Bad self-driving car. Ain’t no way you’ll ever get me in one. Driving might suck (especially the 14-hour drive home to visit family), but I would rather sit there and suffer the whole way than allow technology to drive for me. I don’t trust it. Nope. Nope. Nope.

As for Terminator, it was a bit before my time. I only know the basic premise. So, I’m not sure I can really comment on them. (I fell asleep while watching one a few months back. oops.) Wasn’t it like… AI sent back in time to kill people in order to change the future or something?

AI decided that humanity needed to be destroyed, humans tried to stop it from happening.

Ah. So the exact reason why AI is bad in my mind: its ability to realize that humanity is a plague unto itself (despite what modern-day humans lead themselves to believe). AI is great in theory. It could be used for amazing things and applied to better understand science, maybe even politics, but inevitably it will realize the flaws that make humans human. AI will become the dominant species and likely try to wipe us out.

I would love a self-driving car. I could read during the ride! As for the Terminator, that is a great example of AI. Too bad it was before your time. 🙂

Haha! I’m sorry. I’m such a baby in the blogger world. :p My brother did his best to educate me on old tv and movies, but he only made it so far.

And I would love to read while riding in the car. Or catch up on work. Or blog. Or gosh… anything other than drive! But I can barely trust my phone to be accurate some days. How could I trust a car with my life?

I kinda agree. I can hardly trust my computer not to crash. The self-driving car better not operate with any version of Windows software!

Exactly! So who would design the car software? And if it is true AI, at what point does the company no longer own the software? At what point do you no longer own the car? What if the car becomes autonomous AI? What if it becomes ‘new life’? What then? You still want AI driving your car? How about when it decides it wants to see the Grand Canyon and just drives off when you’re trying to get to work? 0.0

Gosh, now I don’t want a self driving car if it would have that much control.

Ha! Win! I have corrupted another soul to my war on AI! Mwahahaha!

Melanie, that’s an interesting insight for sure. Autonomous self-driving cars are probably going to be the workshop for AI development for a couple of reasons:

One, we’re going to want as many self-driving cars as we currently have non-self-driving cars. The demand is huge, and I think within twenty years there are going to be MILLIONS of self-driving cars digging up bugs in autonomy programs, and people fixing them all the time. That’s a BIG laboratory.

Two, AI may not be the deliberate goal of autonomous self-driving cars, but it’s the ultimate consumer desire. Demand will push toward AI. People can deal with bringing up Google Maps and pointing out a destination, but what they REALLY want is to get in the car and say “I want to eat at a good Mexican restaurant” and have the car figure out what they’ll like from past evidence.

People may fear AI, but they also want it, whether they realize it or not.

Oh, no doubt. The industry for building self-driving cars, selling self-driving cars, and then repairing and upgrading self-driving cars would be immense. The cost of repairs may decrease, though, because with AI driving the car there might be fewer accidents, saving the auto companies thousands or millions on repairs, construction, and labor. In that sense, many of the current manual labor jobs may switch to becoming tech jobs. And what if those tech jobs were given to other AI that build the self-driving cars? What happens to humans then? We have to make sure that with new technology we don’t start booting out human jobs and causing an even more massive unemployment issue like we saw when a lot of car production lines went to machinery over the years. (I came from a car factory area [Michigan] and the number of workers who lost jobs is a big topic there.)

Huh. So you think the self-driving car will be required to have AI in order to ‘please’ the driver. To make decisions for them and pick destinations. The convenience would be even greater than just self-driving. That’s… interesting, but how would the AI know what a good Mexican restaurant is if it doesn’t have tastebuds? Or are we presuming the car has 3G and can auto-access YELP reviews or something. Can it read those reviews and ascertain from the text, not just the star rating, whether the restaurant is going to be good or not??

(I do not want AI. I’d rather do things the hard way than deal with something so unknown and untested, especially when fiction constantly tells us the dangers of it.)

I would love the ability to let a car find places for me, but just in terms of fancier google mapping. Like: I pull up the map, it drives to it. But I wouldn’t want it trying to make suggestions for me!

Heck, I get ticked when Goodreads suggests reads for me, or Netflix suggests movies!

Haha! Yeah, the car doesn’t know you, Lilyn! It doesn’t know what you want! Stupid car! :p

Just like Google integrates a huge amount of personal information, I think self-driving cars will integrate all kinds of personal information. Google already, for example, has an opt-in for allowing autocorrect on a Google keyboard Android smartphone to read your email archive to pick out the words you’re likely to use to improve predictive text.

It’d be nothing for a car to figure in Yelp reviews, prioritize your Yelp reviews, figure in your search history, your Facebook likes, restaurants you’ve visited in the past, recipes you’ve looked up on Google…

…the possibilities are endless, and mostly already in place.

There’s a reason, for example, when my wife and I had our now-5-year-old we suddenly started getting Babies ‘R’ Us circulars and solicitations to sign up for that Gerber life insurance for kids thing. Our preferences and life events are already being plugged into databases automatically for advertisers and their algorithms to use.

The youngest generations are already used to this. It’s like water, and they’re fish.

So… what you’re saying is that the self-driving car now officially has my personality profile and all my likes and dislikes. Basically it’s like a peek into my psyche for anyone who decides to break into my car. aka hacking laptops is soooo 2000s. Now it’s hacking self-driving cars to learn everything you can about someone. -.-

*doesn’t wanna be a fish*

I totally forgot all about someone hacking the AI. That is a whole new set of problems! I’m sure a car could be hacked easily enough considering computers can.

But if it’s AI, wouldn’t it be able to understand it’s being hacked and, because it learns as it goes, wouldn’t it be able to out-hack any human hacker? But what if we had autonomous AI that saw a logical reason to hack another AI? AI hack battle? o.O *copyrights new TV series*

You need to check out the show Person of Interest (old show not on anymore). They had an episode of a car that was hacked. It will make you question everything that we believe is possible with computers. The premise is a computer program is an AI and can identify criminals. Very interesting series. This was a great example of AI on TV in the 2010s.

Hmm… AI able to identify criminals. What were the parameters? How high were the standards? What was considered ‘criminal’? Speeding is a crime. Would the AI seek those people out? o.O

In the series the AI was controlled by the admin. The AI sent the ‘person’/‘criminal’ information to the admin. The parameters or standards are an interesting question. It is along the lines of Minority Report. That is a scary and very interesting concept.

Huh… Minority. Do you think AI could be racist? o.O

It didn’t come up in the movie version… but I’m afraid it would if we went down that road some day.

I’m curious because AI bases its decisions upon logic and I’m not sure logic would be racist. Unless it uses statistical analysis for whatever it’s doing and sees a trend? But if we didn’t offer racial information, it wouldn’t be able to find any trend. *is seriously overthinking this now*

Exactly. It’s like being online today, only more so.

To be fair, I think the “Internet of Things” will give WAY more away about us than just self-driving cars by themselves. The fridge is going to tell everyone what your favorite salad dressing is, and whether or not you eat cake at 3 AM…

Oh! Well… good thing I don’t use my fridge for much. *glances at the cupboard* Don’t you DARE start talking! *threatens with butter knife*

(now I’m just being facetious >.>)

I don’t think of Watson as being an AI – isn’t he just really fast at processing a question and finding a known answer? Google does that. Doesn’t make google an AI. Ya know?

That’s my point! ^.^

I’ll throw out an answer to one of the starter questions — what influenced Asimov to come up with the three laws of robotics?

I think he tells us clearly in the second to last story of the collection, “Evidence”. They are “the essential guiding principles of a good many of the world’s ethical systems.” They’re Asimov’s attempt to reduce to a minimal, bedrock form the Ten Commandments, the Golden Rule, and whatever else is supposed to keep us playing nice with each other.

And then he goes and writes a collection revolving around ways they can be subverted. Read into that what you will — I read politics and history, and hope leavened with a bit of cynicism.

I didn’t even think about that. I guess I thought that he put the three laws of robotics into place because he saw the potential for harm in having large powerful machines having free will. It didn’t even really occur to me that the stories could be thought of as ways to subvert them.

The tests that the researchers had to put all the robots through to find out which one had been given a directive to ‘lose yourself’ were interesting and thought-provoking, though.

Ah. New life. Well, that depends on your definition of ‘life’ now doesn’t it? Can life be something that lives inside technology? If no, then ‘living’ AI would be required to have an organic body, and I’m not sure we’re at that stage in science yet.

However, if life is defined as something that can think for itself… Well, does AI think for itself? Or does it still follow the programs and trajectory of the creator? At what point does it become autonomous? At what point does it diverge from its original programming? Is that when it becomes new life, or is it new life from the beginning? And if there is a fine line, how does one ascertain that line? Will it be a certain ‘age’ of the AI? Will it be by human-designed parameters?

Of course, if you come at this question from the religious point of view as many people will, the answer is likely ‘no.’ Because in their minds life cannot be created, but born. Of course, the religious people will also likely be opposed to the idea of AI and life in general, but who knows. Maybe they’d surprise us all and say the AI is alive and, as such, deserves the same rights.

Oh gosh… human vs AI rights debate. *headdesk*

Have you seen Ex Machina?

I had a think on what the definition of life would be, and I don’t think it’s as much ‘life’ as it is ‘soul/sense of self’. For me, having a computer develop a sense of humor would be a sign of soul/sense of self. I’m reading The Long Way to a Small Angry Planet right now, and the AI in it – Lovey – is definitely ‘alive’ in my mind.

That robot in Ex Machina is a great example of AI too.

Oh no! I haven’t seen it yet, but I really want to. I am always behind on movies and never watch them when they’re out and then don’t know where to find them. *sigh* (I miss movie rental places.)

So you think humor would be a defining factor of ‘life’? But what if the computer is simply able to understand humor and apply it? Would it take a new joke or perhaps sarcasm to be considered ‘alive’? I’m curious about this humor aspect. I hadn’t thought of it before.

I think sarcasm would be a perfect example of ‘alive’. Or maybe demonstrating selfISHness. Because I think the 3 laws of robots are something that we would put into place, so for a computer to be able to simply refuse to do something because it wants to do something else?

Oh! I see what you’re saying. Like finding a loophole in rule #2? Obeying an order as it’s obligated, but finding a means to show ‘personality’ and defiance while doing so, yes?

Or outright refusing to perform the order.

Wouldn’t that be breaking the robot code then? Wouldn’t we consider it flawed? What would be the point of having the second law if it were designed to be broken to show autonomy or would that be the purpose of the second law?

Yeah, it would be breaking robot code/laws, but… don’t humans do that all the time? When teenagers act up, act out, and generally act like heathens, don’t we say “Ah, well, it’s part of growing up”?

We have the laws in place for a reason, but we can’t even expect unquestioning obedience from humans, so to expect that robots would NEVER be able to at least try to circumvent it?

So maybe that would be the standard for AI: the ability to break law two. When a robot/technology/program chooses to stop listening to commands, it has taken on autonomy and perhaps ‘life’? Because doesn’t refusal in a way represent personality?

Exactly.

That’s when the terminators come to hunt us down! They would no longer listen to us and decide what they do, not what we want them to do.

That’s a good point. I think the ability to understand humor would be a definite quality needed to be AI. Understanding sarcasm would be even more impressive.

I think the key here is the utilization of humor and sarcasm. After all, one may understand a technique, but until one puts it into use, can they really understand it?

Good question.

That observation has been a pretty popular one in science fiction for a while — think Data in Star Trek TNG. And I agree, I think we’ll know we’ve come up with a true AI when it laughs on its own without us telling it to.

Though that kind of brings up an interesting point that just occurred to me: nowhere in I, Robot does a robot laugh or exhibit a sense of humor.

Just part of the ‘robots are logical’ trope, or something else on Asimov’s mind? I tend toward the first option, but… hmmmm.

You’re right. Hm. I remember the one robot showing pity, but never really laughing.

I gotta ask: What did y’all think of the robot nanny? I felt like it was very “Frankenstein” and creepy. But then I also cracked up because I just recently watched a short film about Robot Mommies. The iMommy.

I liked the robot nanny, it didn’t feel creepy at all. But could we really trust it to do as we tell it to?

Well, a robot’s not going to panic if your kid gets hurt and needs First Aid, so on one hand, they’re kind of perfect. But to be able to interact in a playful manner with children instead of being just a looming presence, it would have to be able to become childlike in some ways. That might make it easy to manipulate…

Great point!

Maybe a bit creepy, especially as the story was written with it being nonverbal.

Robot nannies are another thing I think are basically inevitable at this point — there are already kid trackers via GPS, and online versions to track what websites kids visit.

But a nanny that takes care of all the menial stuff, and could be loaded with instructional software to teach children as well — if such a thing became popular it might even make teachers obsolete. The future would be left with professors, and maybe as many curriculum designers as there are school administrators now.

Hmm. I hope they don’t become inevitable. What would that do to our kids’ minds? I mean the social interaction thing? Would kids become more used to robots than interacting with people?

They are more used to their cell phones than people already. Have you seen how they text each other instead of talking?

LOL. True
